Issues reported by our clients are very often caused by incorrect or corrupted entries in the tables that store the data. In such cases we usually ask you to export these tables and send them to us for investigation. You can always export a table quickly with DataPump's export script or Earthlight's Export tool, but these tools export data only to specific formats (shp, tab, csv etc.). Our export engines often fix incorrect values during export, so we can no longer reproduce the issue from the data provided. We strongly recommend creating an SQL dump of the corrupted table, because only this method guarantees that the data is exported in exactly the same format and with exactly the same values as stored in your database.

To generate a dump file by using the Sqldumper.exe utility, follow these steps: Open the following folder: SQLServerInstallDrive:\Program Files\Microsoft SQL Server\number\Shared. Note: In this folder path, number is a placeholder for a version-specific value, for example 140 for SQL Server 2017.

- Click Start, and then click Control Panel. Double-click System, and then click Advanced system settings. Click the Advanced tab, and then click Settings under Startup and Recovery. In the Write debugging information list, click Small memory dump (64k).

- The file with the “LOG” extension is a snippet of the ERRORLOG file from the time the dump was generated, so you can look at exactly the relevant part of the error log. The file with the “MDMP” extension (a minidump file) is a small memory footprint of the SQL Server process at the time the issue happened.

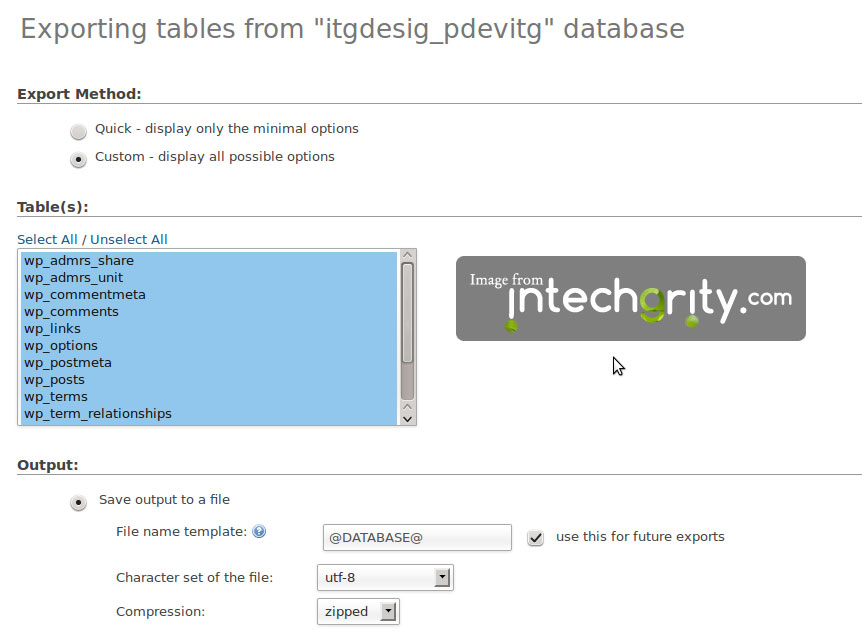

To create an SQL dump of the desired table, follow these steps.

- Run SQL Server Management Studio.

- Connect to your database server.

- Once you are connected, you will see a list of all databases in the left panel. Find the database that stores the table you want to export.

- Right-click the database, go to the Tasks section of the context menu, and select the Generate Scripts option.

- You should now see the Generate and Publish Scripts dialog window. Click the Next button on the initial screen.

- This is the Select the database objects to script screen. If you want to export just one table, do the following:

  - click the Select specific database objects option

  - click the small + icon next to Tables to expand the list of tables

  - find the desired table in the list and tick the checkbox next to the table name

  - if you are happy with the settings, click the Next button

- Now specify the settings for the file that you want to generate. Make sure that your settings are the same as in the image below. Set the name and path for the file and click the Advanced button.

- In the Advanced Scripting Options window, scroll down to the ‘Types of data to script’ option and set it to ‘Schema and data’. Click OK to accept the settings, then click Next to continue.

- You will now see a Summary window. There is no need to change anything here; just check that all options are correct. If you are happy with the settings, click the Next button.

- The script is now being created. This operation may take a while, depending on the table size. Once it is completed, you should see a confirmation screen similar to the one below. Click the Finish button to close the dialog window.

- Go to the location specified in the settings and check that the file is there.

- Congratulations! You have just created an SQL dump file. You can now send it to our support team (remember that you can create a ZIP archive to reduce the file size).
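As a quick sanity check before sending the file, you can open it in a text editor. With ‘Schema and data’ selected, it should contain both a table definition and one INSERT statement per row. The excerpt below is only a rough sketch of what such a file might look like; the database, table, and column names are hypothetical, and the exact output depends on your SQL Server Management Studio version and scripting options.

```sql
-- Hypothetical excerpt of a script generated with 'Schema and data'.
-- ExampleDb, ExampleTable, Id and Name are illustrative names only.
USE [ExampleDb]
GO

-- Schema part: the table definition.
CREATE TABLE [dbo].[ExampleTable](
    [Id] [int] NOT NULL,
    [Name] [nvarchar](50) NULL
) ON [PRIMARY]
GO

-- Data part: one INSERT per stored row, with values written as stored.
INSERT [dbo].[ExampleTable] ([Id], [Name]) VALUES (1, N'first row')
GO
INSERT [dbo].[ExampleTable] ([Id], [Name]) VALUES (2, NULL)
GO
```

If the file contains only the CREATE TABLE part, the ‘Types of data to script’ option was most likely left at ‘Schema only’.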

Because Data Pump is server-based rather than client-based, dump files, log files, and SQL files are accessed relative to server-based directory paths. Data Pump requires that directory paths be specified as directory objects. A directory object maps a name to a directory path on the file system. DBAs must ensure that only approved users are allowed access to the directory object associated with the directory path.

The following example shows a SQL statement that creates a directory object named dpump_dir1 that is mapped to a directory located at /usr/apps/datafiles.
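The statement itself does not appear on this page. A minimal sketch, assuming standard Oracle CREATE DIRECTORY syntax and the name and path given above, would be:

```sql
-- Creates a directory object named dpump_dir1 that maps to a
-- server-side file system path. Requires the CREATE ANY DIRECTORY privilege.
CREATE DIRECTORY dpump_dir1 AS '/usr/apps/datafiles';
```

The path refers to the file system of the database server, not of the client machine that issues the statement.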

The reason that a directory object is required is to ensure data security and integrity. For example:

If you were allowed to specify a directory path location for an input file, then you might be able to read data that the server has access to, but to which you should not.

If you were allowed to specify a directory path location for an output file, then the server might overwrite a file that you might not normally have privileges to delete.

On UNIX and Windows operating systems, a default directory object, DATA_PUMP_DIR, is created at database creation or whenever the database dictionary is upgraded. By default, it is available only to privileged users. (The user SYSTEM has read and write access to the DATA_PUMP_DIR directory, by default.)

If you are not a privileged user, then before you can run Data Pump Export or Data Pump Import, a directory object must be created by a database administrator (DBA) or by any user with the CREATE ANY DIRECTORY privilege.

After a directory is created, the user creating the directory object must grant READ or WRITE permission on the directory to other users. For example, to allow the Oracle database to read and write files on behalf of user hr in the directory named by dpump_dir1, the DBA must execute the following command:
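The command is not reproduced on this page. A minimal sketch, assuming standard Oracle GRANT syntax for directory objects and the user and directory names from the paragraph above, would be:

```sql
-- Lets the Oracle database read and write files in dpump_dir1
-- on behalf of user hr.
GRANT READ, WRITE ON DIRECTORY dpump_dir1 TO hr;
```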

Note that READ or WRITE permission to a directory object only means that the Oracle database can read or write files in the corresponding directory on your behalf. You are not given direct access to those files outside of the Oracle database unless you have the appropriate operating system privileges. Similarly, the Oracle database requires permission from the operating system to read and write files in the directories.

Data Pump Export and Import use the following order of precedence to determine a file's location:

- If a directory object is specified as part of the file specification, then the location specified by that directory object is used. (The directory object must be separated from the file name by a colon.)

- If a directory object is not specified as part of the file specification, then the directory object named by the DIRECTORY parameter is used.

- If a directory object is not specified as part of the file specification, and if no directory object is named by the DIRECTORY parameter, then the value of the environment variable, DATA_PUMP_DIR, is used. This environment variable is defined using operating system commands on the client system where the Data Pump Export and Import utilities are run. The value assigned to this client-based environment variable must be the name of a server-based directory object, which must first be created on the server system by a DBA. For example, suppose a DBA has created a directory object named DUMP_FILES1 on the server system, located at '/usr/apps/dumpfiles1'. A user on a UNIX-based client system using csh can then assign the value DUMP_FILES1 to the environment variable DATA_PUMP_DIR, and the DIRECTORY parameter can be omitted from the command line. The dump file employees.dmp and the log file export.log are then written to '/usr/apps/dumpfiles1'.

- If none of the previous three conditions yields a directory object and you are a privileged user, then Data Pump attempts to use the value of the default server-based directory object, DATA_PUMP_DIR. This directory object is automatically created at database creation or when the database dictionary is upgraded. You can see the path definition for DATA_PUMP_DIR by querying the DBA_DIRECTORIES view. If you are not a privileged user, then access to the DATA_PUMP_DIR directory object must have previously been granted to you by a DBA.

Do not confuse the default DATA_PUMP_DIR directory object with the client-based environment variable of the same name.
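The SQL statements that the precedence rules above refer to are not reproduced on this page. They can be sketched as follows, assuming standard Oracle syntax and the DBA_DIRECTORIES data dictionary view; the directory name and path are the ones mentioned in the text.

```sql
-- Run by a DBA on the server: creates the directory object whose name
-- a client can later assign to the DATA_PUMP_DIR environment variable.
CREATE DIRECTORY DUMP_FILES1 AS '/usr/apps/dumpfiles1';

-- Shows the file system path behind the default DATA_PUMP_DIR
-- directory object (requires access to DBA_DIRECTORIES).
SELECT directory_name, directory_path
  FROM dba_directories
 WHERE directory_name = 'DATA_PUMP_DIR';
```

The first statement sets up the server-side object used by the third rule, and the query helps confirm where files end up when the fourth rule applies.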